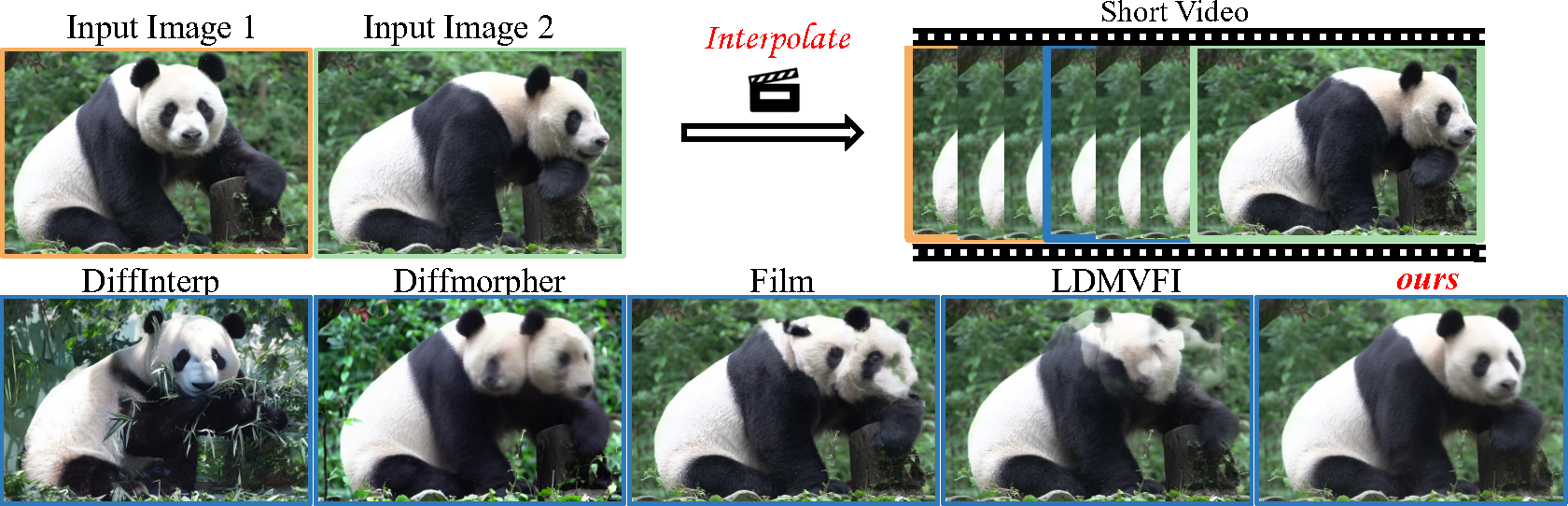

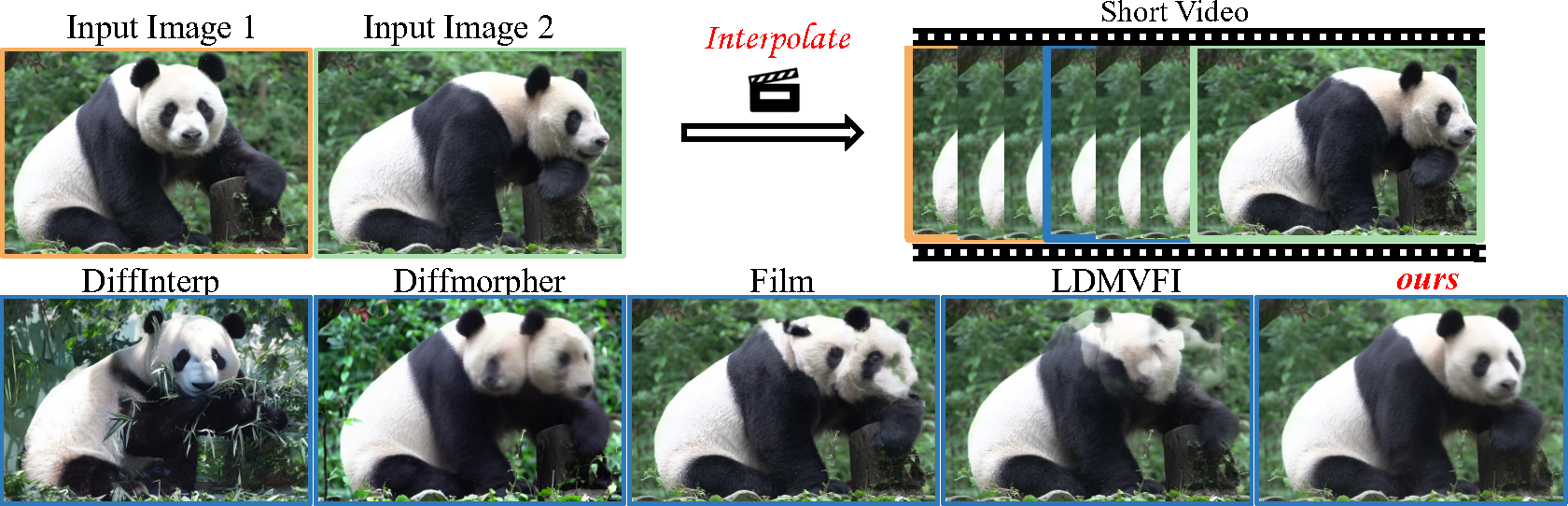

Given two input images with large motion, our proposed method can generate a short video with high fidelity and semantic consistency compared to previous approaches.

1Huazhong University of Science and Technology 2Adobe Research

Given two input images with large motion, our proposed method can generate a short video with high fidelity and semantic consistency compared to previous approaches.

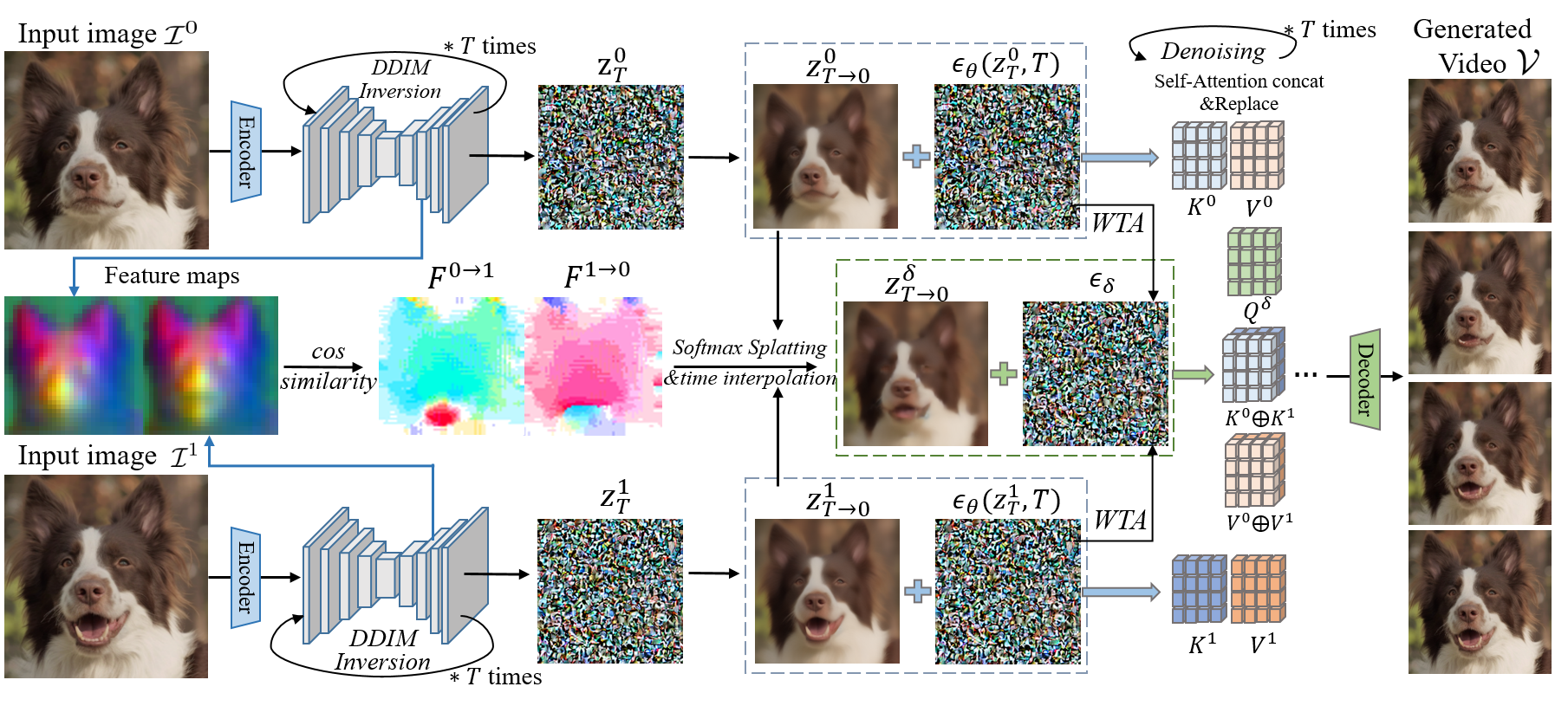

We study the problem of generating intermediate images from image pairs with large motion while maintaining semantic consistency. Due to the large motion, the intermediate semantic information may be absent in input images. Existing methods either limit to small motion or focus on topologically similar objects, leading to artifacts and inconsistency in the interpolation results. To overcome this challenge, we dig into the pre-trained image diffusion models for their capabilities in semantic representations and generations, which ensures consistent expression of the absent intermediate semantic representations with the input. To this end, we propose DreamMover, a novel image interpolation framework with three main components: 1) A natural flow estimator based on the diffusion model that can implicitly reason about the semantic correspondence between two images. 2) To avoid loss of detailed information during fusion, our key insight is to fuse information in two parts, high-level space and low-level space. 3) To enhance the consistency between the generated images and input, we propose the self-attention concatenation and replacement approach. Lastly, we present a challenging benchmark dataset called InterpBench to evaluate the semantic consistency of generated results. Extensive experiments demonstrate the effectiveness of our method. Code will be released soon.

Given two input images, each is fed into the pre-trained diffusion model for adding noise via DDIM inversion. We extract feature maps from up-blocks of U-Net, and leverage them to get the pixel correspondences of the two images to yield the bidirectional optical flow. We decompose the noisy latent code into low-level and high-level components. To maintain the high-frequency information of images, we perform softmax splatting and time interpolation on high-level space. As for low-level space, we replace all weighted average operations with "Winner-Takes-All"(WTA). In addition, we propose a novel self-attention concatenation and replacement method for consistency. Finally, our method can generate a sequence of high fidelity and consistency interpolation frames.

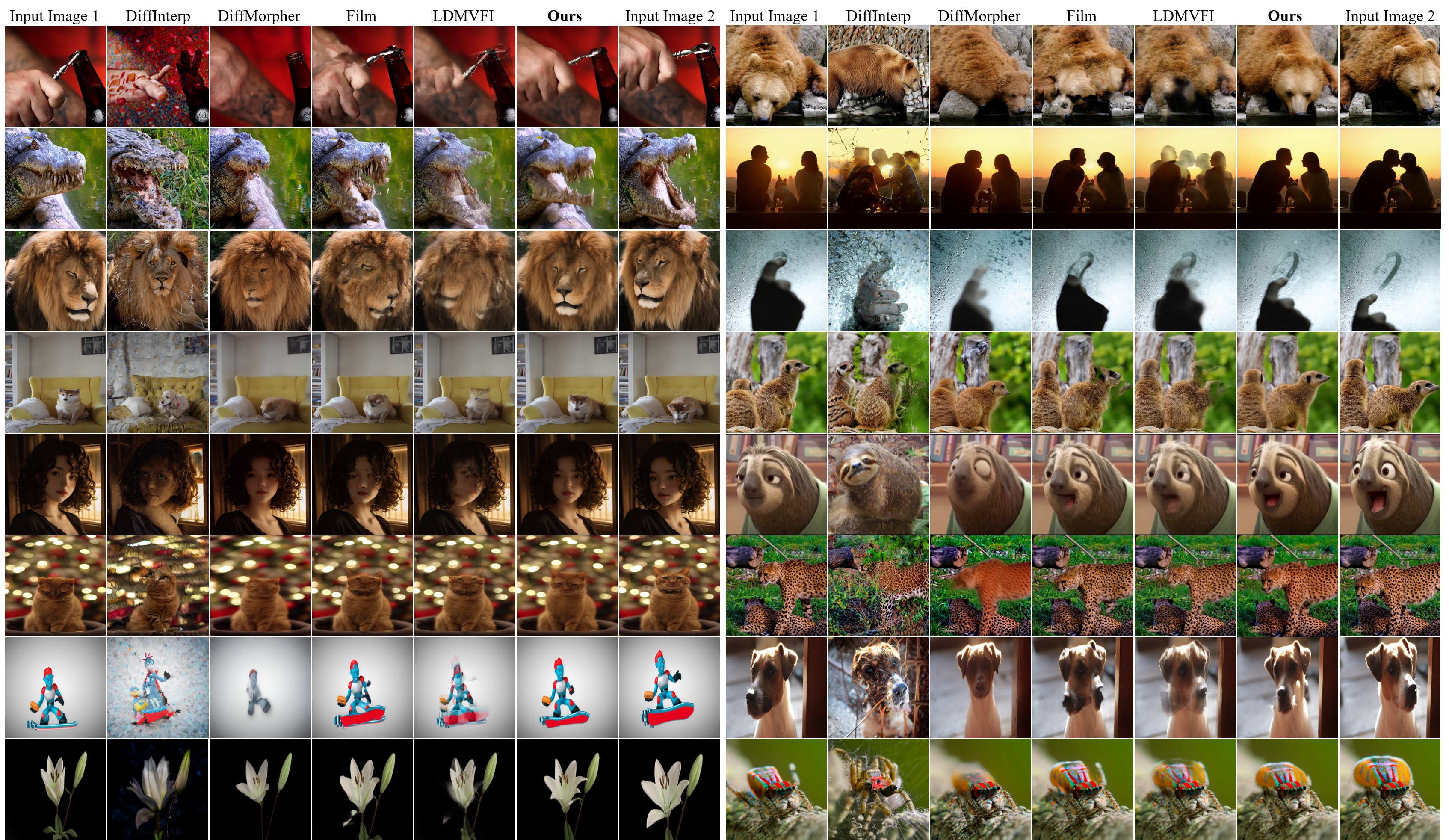

More Visualization Comparison of baselines and our method. We show the middle-most image obtained by all methods. Our approach generates intermediate results that maintain the best semantic consistency.

@article{shen2024dreammover,

title={DreamMover: Leveraging the Prior of Diffusion Models for Image Interpolation with Large Motion},

author={Shen, Liao and Liu, Tianqi and Sun, Huiqiang and Ye, Xinyi and Li, Baopu and Zhang, Jianming and Cao, Zhiguo},

journal={arXiv preprint arXiv:2409.09605},

year={2024}

}